Have you ever opened a math or machine learning textbook and instantly felt lost?

It’s not often that I hear someone get ecstatic about the number of symbols in a math formula.

But machine learning math only looks intimidating because it’s written in a very serious font, with very serious Greek letters, and absolutely no sense of humor.

Behind every formula, though, is a very normal idea.

Let’s take some very common ML formulas and “humanize” them.

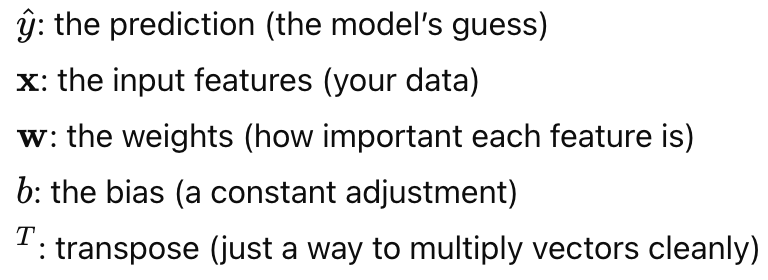

1️⃣ Linear Regression

In Plain English: “Given what I know, here’s my best guess.”

What It Actually Does:

Linear Regression is just trying to draw the best-fitting straight line through your data. It takes some inputs, multiplies them by weights (how important each input is), adds a bias (a small nudge), and gives you a number.
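In code, a prediction is just a weighted sum plus a bias. Here is a minimal Python sketch; the house-price framing, weights, and bias below are made-up numbers for illustration, not values fitted to real data:

```python
from typing import Sequence


def predict(inputs: Sequence[float], weights: Sequence[float], bias: float) -> float:
    """Linear regression prediction: y_hat = w1*x1 + w2*x2 + ... + b."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    # Multiply each input by how important it is, add it all up, then nudge by the bias.
    return sum(w * x for w, x in zip(weights, inputs)) + bias


# Hypothetical example: price (in $1000s) from size in m^2 and age in years.
# These weights are invented for illustration, not learned from data.
weights = [0.2, -1.5]
bias = 50.0
print(predict([120.0, 10.0], weights, bias))
```

Training (sections 2 and 3) is just the process of finding weights and a bias that make these guesses as good as possible.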

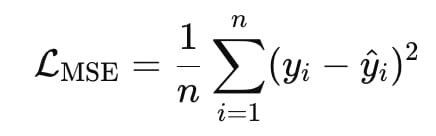

2️⃣ MSE Loss (Mean Squared Error)

In Plain English: “How bad were my guesses…on average?”

What It Actually Does:

It measures how wrong your predictions are, on average. It takes the difference between what your model predicted and what actually happened, squares it (so big mistakes get punished more), and averages everything out.
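Here is the same idea as a short Python sketch (the sample numbers are invented). Notice how the single miss of 4 dominates the result once it is squared:

```python
from typing import Sequence


def mse(predictions: Sequence[float], targets: Sequence[float]) -> float:
    """Mean Squared Error: the average of the squared differences."""
    if len(predictions) != len(targets) or len(predictions) == 0:
        raise ValueError("need two non-empty sequences of equal length")
    # Square each error so big misses count much more than small ones.
    squared_errors = [(p - t) ** 2 for p, t in zip(predictions, targets)]
    return sum(squared_errors) / len(squared_errors)


# Errors are 1, 0, and 4; squared they become 1, 0, and 16; the average is 17 / 3.
print(mse([3.0, 5.0, 10.0], [2.0, 5.0, 6.0]))
```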

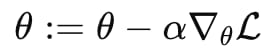

3️⃣ Gradient Descent

In Plain English: “Let’s tweak the knobs…carefully.”

What It Actually Does:

It adjusts the model’s parameters to reduce error. Imagine standing on a hill in a thick fog and trying to walk downhill without falling. You take small steps in the steepest downward direction.
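Here is a minimal sketch in Python, assuming the model is the straight line from section 1 and the loss is the MSE from section 2. The learning rate (the size of each step), the number of steps, and the toy data are illustrative choices, not values taken from the formula above:

```python
def gradient_descent(xs, ys, learning_rate=0.05, steps=2000):
    """Fit y ~ w*x + b by repeatedly stepping downhill on the MSE loss."""
    w, b = 0.0, 0.0  # start somewhere on the foggy hill
    n = len(xs)
    for _ in range(steps):
        # How wrong the current line is on every data point.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Slope of the MSE loss with respect to each knob (the gradient).
        grad_w = (2 / n) * sum(e * x for e, x in zip(errors, xs))
        grad_b = (2 / n) * sum(errors)
        # Take a small step in the steepest downhill direction.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Toy data that lies exactly on the line y = 2x + 1.
w, b = gradient_descent([0.0, 1.0, 2.0, 3.0], [1.0, 3.0, 5.0, 7.0])
print(f"w = {w:.3f}, b = {b:.3f}")
```

It should land very close to w = 2 and b = 1. Make the learning rate too large and the steps overshoot the valley instead of settling into it.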

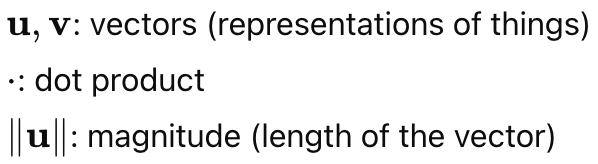

4️⃣ Cosine Similarity

In Plain English: “Are these two things pointing in the same direction?”

What It Actually Does:

Cosine Similarity measures how similar two vectors, u and v, are based on their direction, not their size. The || symbols in the equation denote a vector’s length (its magnitude), so ||u|| is the length of u. In ML, this is heavily used in text similarity, recommendations, and search engines.
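A minimal Python sketch of the formula cos(θ) = (u · v) / (||u|| ||v||), with three toy vector pairs chosen for illustration:

```python
import math
from typing import Sequence


def cosine_similarity(u: Sequence[float], v: Sequence[float]) -> float:
    """cos(theta) = (u . v) / (||u|| * ||v||)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))  # ||u||, the length of u
    norm_v = math.sqrt(sum(b * b for b in v))  # ||v||, the length of v
    if norm_u == 0 or norm_v == 0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return dot / (norm_u * norm_v)


print(cosine_similarity([1, 2], [2, 4]))    # same direction, different size
print(cosine_similarity([1, 0], [0, 1]))    # perpendicular
print(cosine_similarity([1, 2], [-1, -2]))  # opposite directions
```

Up to floating-point rounding, these give 1, 0, and -1. The first pair shows the point of the metric: [2, 4] is twice as long as [1, 2], yet the score is still a perfect match.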

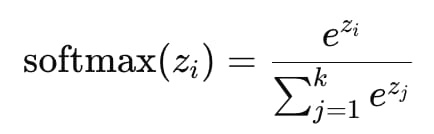

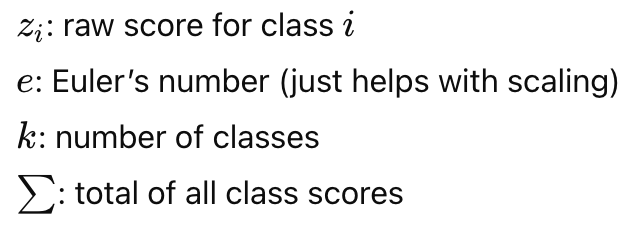

5️⃣ Softmax Function

In Plain English: “Out of all options…how confident are you in each one?”

What It Actually Does:

The softmax function turns raw scores (logits) into probabilities that add up to 1. For example, if a model says:

Cat: 12, Dog: 9, Toaster: 2

Then softmax translates that into something like:

Cat: 95%, Dog: 5%, Toaster: Please stop.
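A minimal Python sketch of softmax, run on the cat/dog/toaster scores from the example. Subtracting the largest score first is a common numerical-stability trick, not part of the formula itself; it cancels out in the division, so the probabilities are unchanged:

```python
import math
from typing import Dict


def softmax(logits: Dict[str, float]) -> Dict[str, float]:
    """Turn raw scores into probabilities that add up to 1."""
    # Shifting every score by the max keeps math.exp from overflowing on big logits.
    top = max(logits.values())
    exps = {label: math.exp(score - top) for label, score in logits.items()}
    total = sum(exps.values())
    return {label: e / total for label, e in exps.items()}


probs = softmax({"Cat": 12.0, "Dog": 9.0, "Toaster": 2.0})
for label, p in probs.items():
    print(f"{label}: {p:.2%}")
```

With these scores, Cat comes out at roughly 95%, Dog at roughly 5%, and Toaster at well under 0.01%. Because softmax exponentiates, a 3-point lead in raw score turns into about a 20x lead in probability.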

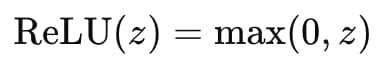

6️⃣ ReLU Activation

In Plain English: “If it’s negative, ignore it.”

What It Actually Does:

ReLU activation keeps positive values and replaces negative ones with zero: ReLU(x) = max(0, x). If the number is greater than zero → keep it. If it’s negative → nope, it becomes 0.
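In Python, ReLU is a one-liner. The sample inputs here are arbitrary:

```python
def relu(x: float) -> float:
    """ReLU(x) = max(0, x): keep positives, turn negatives into zero."""
    return max(0.0, x)


print([relu(x) for x in [-3.0, -0.5, 0.0, 2.0, 7.5]])  # [0.0, 0.0, 0.0, 2.0, 7.5]
```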

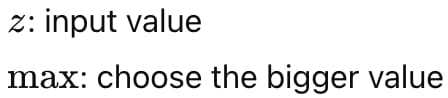

7️⃣ Forward Propagation

In Plain English: “Let’s see what the model thinks.”

What It Actually Does:

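Forward propagation moves input data forward through the network, one layer at a time, to produce an output. At each layer, the inputs are multiplied by weights, a bias is added, and an activation function (like ReLU) decides what gets passed on to the next layer.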
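Here is a minimal sketch of a forward pass through a tiny, hypothetical network with 2 inputs, 2 hidden neurons using ReLU, and 1 output. The weights are hand-picked for illustration; a real network would learn them:

```python
from typing import List, Sequence


def dense(inputs: Sequence[float], weights: List[List[float]], biases: Sequence[float]) -> List[float]:
    """One layer: each neuron takes a weighted sum of the inputs plus its own bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b for row, b in zip(weights, biases)]


def relu_layer(values: Sequence[float]) -> List[float]:
    """Apply ReLU to every neuron's output."""
    return [max(0.0, v) for v in values]


def forward(x: Sequence[float]) -> float:
    # Hidden layer (hypothetical weights): 2 inputs -> 2 neurons, then ReLU.
    hidden = relu_layer(dense(x, [[0.5, -1.0], [1.0, 1.0]], [0.0, -1.0]))
    # Output layer (hypothetical weights): 2 hidden values -> 1 number.
    output = dense(hidden, [[2.0, -0.5]], [0.1])
    return output[0]


print(forward([2.0, 1.0]))
```

With these made-up weights, the input [2.0, 1.0] produces about -0.9: the first hidden neuron computes exactly 0 and stays silent, so only the second one influences the output.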

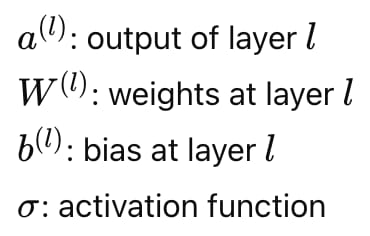

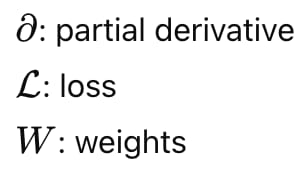

8️⃣ Backpropagation

In Plain English: “Who messed this up, and by how much?”

What It Actually Does:

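Backpropagation calculates how much each weight contributed to the error, so the model knows what to fix. It does this by starting at the loss and applying the chain rule backward through the network, layer by layer.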
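To see the chain rule at work, here is a hand-written sketch for a hypothetical one-hidden-unit network with ReLU and a squared-error loss. The weights and the data point are made up; libraries such as PyTorch compute these gradients automatically instead:

```python
def forward_and_backward(x, y, w1, b1, w2, b2):
    """Tiny network: h = relu(w1*x + b1), y_hat = w2*h + b2, loss = (y_hat - y)**2."""
    # Forward pass: keep the intermediate values, backprop needs them.
    z = w1 * x + b1
    h = max(0.0, z)
    y_hat = w2 * h + b2
    loss = (y_hat - y) ** 2

    # Backward pass: chain rule from the loss back to each weight.
    d_yhat = 2 * (y_hat - y)               # dLoss/dy_hat
    d_w2 = d_yhat * h                      # dLoss/dw2
    d_b2 = d_yhat                          # dLoss/db2
    d_h = d_yhat * w2                      # blame passed back to the hidden unit
    d_z = d_h * (1.0 if z > 0 else 0.0)    # ReLU only passes blame back if it was active
    d_w1 = d_z * x                         # dLoss/dw1
    d_b1 = d_z                             # dLoss/db1
    return loss, {"w1": d_w1, "b1": d_b1, "w2": d_w2, "b2": d_b2}


loss, grads = forward_and_backward(x=2.0, y=1.0, w1=0.5, b1=0.0, w2=3.0, b2=0.5)
print(loss, grads)
```

Here the loss is 6.25, and w1 gets the largest gradient (30.0), so nudging w1 would change the loss the most. Gradient descent then uses exactly these numbers to decide which knobs to turn.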

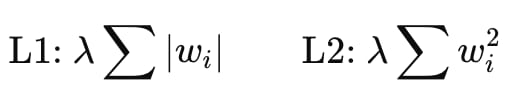

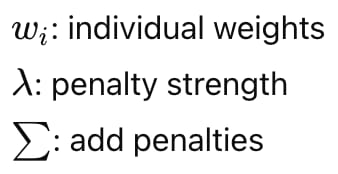

9️⃣ L1 & L2 Regularization

In Plain English: “Relax. You’re doing too much.”

What It Actually Does:

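L1 and L2 Regularization prevent the model from relying too heavily on any single weight by adding a penalty for large weights to the loss. L1 penalizes the sum of the weights’ absolute values, which can push some weights all the way to zero. L2 penalizes the sum of their squares, which shrinks all of them toward zero.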
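Both penalties get added to the ordinary loss. A minimal Python sketch follows; the regularization strength `lam` (usually written λ, renamed because `lambda` is a Python keyword), the data loss of 2.0, and the sample weights are illustrative assumptions:

```python
from typing import Sequence


def l1_penalty(weights: Sequence[float], lam: float) -> float:
    """L1: lambda times the sum of absolute weights."""
    return lam * sum(abs(w) for w in weights)


def l2_penalty(weights: Sequence[float], lam: float) -> float:
    """L2: lambda times the sum of squared weights."""
    return lam * sum(w * w for w in weights)


def regularized_loss(data_loss: float, weights: Sequence[float], lam: float, kind: str = "l2") -> float:
    """Total loss = how wrong the model is + a penalty for doing too much."""
    penalty = l1_penalty(weights, lam) if kind == "l1" else l2_penalty(weights, lam)
    return data_loss + penalty


weights = [3.0, -0.5, 0.0, 1.5]
print(regularized_loss(2.0, weights, lam=0.1, kind="l1"))  # 2.0 + 0.1 * 5.0
print(regularized_loss(2.0, weights, lam=0.1, kind="l2"))  # 2.0 + 0.1 * 11.5
```

Notice that L2 charges the big weight 3.0 a penalty of 9 before scaling, while L1 charges it only 3. That is why L2 leans hardest on the largest weights.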

⭐ Conclusion

At the end of the day, ML math is not about being scary — it’s about being precise. Every formula boils down to:

Make a guess → Measure how wrong it was → Adjust → Repeat → Try not to overfit your personality.

And that’s the real formula.