Drum roll please…

You’ve made it to the season finale of Supervised Learning’s Got Talent!

We’ve had a line-up of mega-talented stars, like last week’s Naive Bayes.

Now it’s time to roll out the red carpet for the final star of the season. This contestant is a bit of a superhero: someone who really knows how to slice through the chaos.

Introducing the mighty Support Vector Machines!

Support Vector Machines: Because not all heroes wear capes…some just draw epic margins.

🦸‍♂️ Support Vector Machines (SVM) - “The Data-Slicing Superhero”

Imagine you have a bunch of data points — some are cats 🐱, some are dogs 🐶 — and your job is to draw a line that separates them as cleanly as possible.

Now, if you’re like most algorithms, you might draw a line that kinda works.

But “kinda” is unacceptable for SVM.

SVM puts on its superhero suit, surveys the battlefield and says: “I will draw the best possible line that leaves the most breathing room between the two classes.”

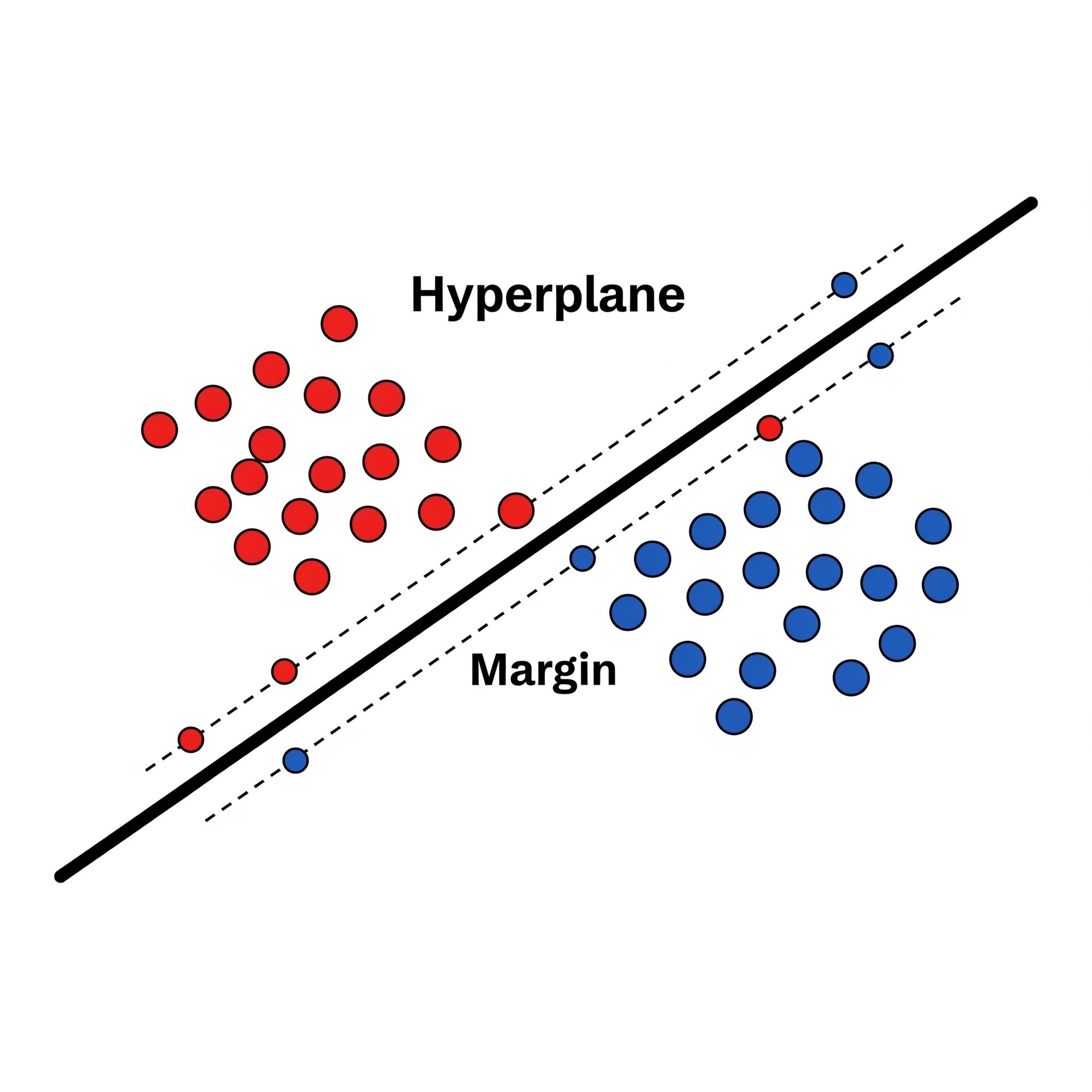

That magic line is called a hyperplane. The breathing room is called the margin.

SVM separating red and blue more effectively than a presidential election.

🤜🤛 The Dynamic Duo: Hyperplane & Margin

✈️ Hyperplanes

In SVM-speak, a hyperplane is just a fancy term for the decision boundary: the thing that separates your data into classes.

In 2D, it’s a line.

In 3D, it’s a plane.

In higher dimensions, it’s called a hyperplane (because our human brains can't visualize it, so we slap “hyper” on it and move on 😅).
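To make this a bit more concrete, here’s a minimal plain-Python sketch of what a hyperplane actually does. It assumes the usual textbook form: a weight vector `w` and an offset `b` define the hyperplane `w · x + b = 0`, and the sign of `w · x + b` tells you which side a point lands on (say, +1 for dog and -1 for cat). The helper names and numbers are made up for illustration; the point is that the exact same recipe works in 2D, 3D, or 5D.

```python
# Hypothetical helpers for illustration: a hyperplane in n dimensions is the
# set of points x where w . x + b = 0.

def dot(u, v):
    """Plain dot product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))

def side_of_hyperplane(w, b, x):
    """Return +1 or -1 for the side of the hyperplane x falls on (0 if exactly on it)."""
    score = dot(w, x) + b
    if score > 0:
        return 1
    if score < 0:
        return -1
    return 0

# 2D: the hyperplane is the line x1 + x2 - 3 = 0
line_w, line_b = [1.0, 1.0], -3.0
# 3D: the hyperplane is the plane x1 + x2 + x3 - 3 = 0
plane_w, plane_b = [1.0, 1.0, 1.0], -3.0
# 5D: same recipe, we just can't picture it
hyper_w, hyper_b = [1.0] * 5, -3.0

print(side_of_hyperplane(line_w, line_b, [2.0, 2.0]))                   # 1
print(side_of_hyperplane(plane_w, plane_b, [0.0, 0.0, 1.0]))            # -1
print(side_of_hyperplane(hyper_w, hyper_b, [1.0, 1.0, 1.0, 0.0, 0.0]))  # 0
```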

┆ Margin

The margin is the “wiggle room”, or the breathing space I mentioned above. Basically, it’s the space between the hyperplane and the closest data points (called Support Vectors) from each class.

The goal of SVM is to maximize the margin. It finds the largest possible buffer zone on either side of the hyperplane, which is what makes it so effective: the wider the buffer, the less likely a new point is to land on the wrong side.

Basically, SVMs are like a restraining order for data.
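Here’s a small sketch of how you could measure that buffer zone for a given line, using the standard distance formula `y * (w · x + b) / ||w||` (positive when a point is on its correct side). The cat/dog coordinates and both candidate lines are invented toy values, and this code only *scores* lines you hand it; a real SVM solver searches for the best one.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def margin(w, b, points, labels):
    """Smallest signed distance from any labeled point to the hyperplane w . x + b = 0.

    Positive only if every point is on its correct side; the points that tie
    for this minimum are the ones that would act as support vectors.
    """
    norm_w = math.sqrt(dot(w, w))
    return min(y * (dot(w, x) + b) / norm_w for x, y in zip(points, labels))

# Toy data (made up): cats are labeled -1, dogs +1
points = [(1, 1), (2, 1), (1, 2), (4, 4), (5, 4), (4, 5)]
labels = [-1, -1, -1, 1, 1, 1]

# Line A "kinda works": x1 + x2 - 4 = 0 separates the classes but hugs the cats
margin_a = margin((1, 1), -4, points, labels)
# Line B: x1 + x2 - 5.5 = 0 sits halfway between the closest cats and the closest dog
margin_b = margin((1, 1), -5.5, points, labels)

print(round(margin_a, 4))  # 0.7071
print(round(margin_b, 4))  # 1.7678
```

Both lines separate cats from dogs, but line B leaves more than twice the breathing room, so it’s the kind of line SVM goes for. The points that tie for the minimum distance under line B, (2, 1), (1, 2) and (4, 4), are its support vectors.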

🪄 Kernel Trick

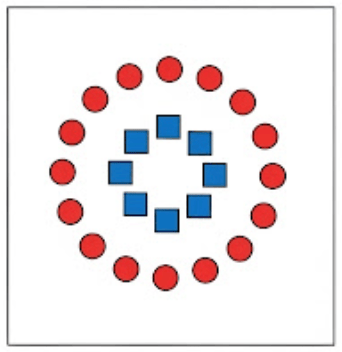

Now, if you’re keen, you may have asked yourself: “But what if my data isn’t easily separated by a straight line?”

For example, how the hell would I separate something like this with a straight line?:

Not an IQ test. We just need SVM to the rescue.

Well, most superheroes have a secret weapon, and SVM is no different… its secret weapon is called the Kernel Trick.

Looking at the image above, you can’t draw a single straight line to separate the red and blue dots without crossing into the wrong territory. We also don’t want a curvy line; that would be messy and complicated.

Instead, the Kernel Trick uses a “magical” function to project your data into a higher dimension, where a flat hyperplane (the higher-dimensional version of a straight line) can separate it!

That’s some Doctor Strange level of cool.

SVM: “Can’t draw a straight line? No worries, I’ll just conjure a new dimension with the Kernel Trick so you can.” *mic drop*
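Here’s a hand-rolled version of that idea in plain Python. It assumes the tricky data is one class in a small circle with the other class in a ring around it (a guess at the kind of picture above, not a reconstruction of it), and it uses an explicit, hand-picked projection that adds a third coordinate, `x1² + x2²`. This is the *explicit* transformation; the real Kernel Trick gets the same effect without ever computing it.

```python
import math

def lift(point):
    """Hand-picked 3D projection (an assumption for this demo): keep x1, x2 and add height x1^2 + x2^2."""
    x1, x2 = point
    return (x1, x2, x1 ** 2 + x2 ** 2)

# Made-up data: red dots on a small circle (radius 1), blue dots on a ring around them (radius 3)
angles = [2 * math.pi * k / 8 for k in range(8)]
red = [(1.0 * math.cos(a), 1.0 * math.sin(a)) for a in angles]
blue = [(3.0 * math.cos(a), 3.0 * math.sin(a)) for a in angles]

def classify(point, w=(0.0, 0.0, 1.0), b=-4.0):
    """In 3D, the flat plane z = 4 (w = (0, 0, 1), b = -4) splits the two groups."""
    z = lift(point)
    score = sum(wi * zi for wi, zi in zip(w, z)) + b
    return "blue" if score > 0 else "red"

print(all(classify(p) == "red" for p in red))    # True
print(all(classify(p) == "blue" for p in blue))  # True
```

Back in the original 2D picture, that flat plane traces out the circle x1² + x2² = 4, sitting neatly between the two groups.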

It’s called the Kernel “Trick” because the kernel function does a simple calculation on the original, low-dimensional data that gives exactly the same result as a dot product computed in the higher-dimensional space. In other words, SVM gets all the benefits of the new dimension without ever performing the expensive transformation.

That’s one hell of a hack.
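Here’s the classic small example of that hack, using the degree-2 polynomial kernel `k(x, z) = (x · z)²`. Expanding the square, `(x1·z1 + x2·z2)² = x1²z1² + 2·x1x2·z1z2 + x2²z2²`, which is exactly the dot product of the 3D vectors `(x1², √2·x1x2, x2²)` and `(z1², √2·z1z2, z2²)`. The sketch below checks that numerically; the two input points are arbitrary.

```python
import math

def phi(x):
    """Explicit map from 2D to 3D: (x1^2, sqrt(2) * x1 * x2, x2^2)."""
    x1, x2 = x
    return (x1 ** 2, math.sqrt(2) * x1 * x2, x2 ** 2)

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def poly_kernel(x, z):
    """Degree-2 polynomial kernel: one dot product in 2D, then square it."""
    return dot(x, z) ** 2

x, z = (1.0, 2.0), (3.0, 4.0)

expensive = dot(phi(x), phi(z))  # transform both points, then take the dot product in 3D
cheap = poly_kernel(x, z)        # never leaves 2D

print(cheap)                           # 121.0
print(math.isclose(expensive, cheap))  # True
```

For two points the savings are tiny, but for higher-degree kernels the explicit feature space blows up fast, while the kernel stays a single dot product plus a power.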

Exactly how it works is beyond the scope of this post, but no worries, I’ll cover it in detail in the future.

✅ Advantages & Disadvantages

SVMs are like a super-powered sorcerer. They are powerful and effective in high-dimensional spaces, making them awesome for complex datasets.

They are also memory-efficient, because the trained model only needs to keep a small subset of the training data (the support vectors) to make predictions. On top of that, the Kernel Trick lets them solve both linear and non-linear problems with ease.
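To see why only the support vectors matter, here’s a sketch of how a trained SVM makes predictions in its so-called dual form, `f(x) = Σ αᵢ yᵢ k(xᵢ, x) + b`, where every training point gets a weight `αᵢ` and only support vectors get a non-zero one. The three-point training set is a made-up toy, and the `alphas` and `b` below are its hard-margin solution worked out by hand (equivalent to `w = (1, 0)`, `b = 0`), not the output of a real solver.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

# Toy training set (made up): two points face off near the boundary, one sits far away
train_x = [(1.0, 0.0), (-1.0, 0.0), (3.0, 0.0)]
train_y = [1, -1, 1]

# Hand-derived hard-margin solution for this toy data (not from a solver)
alphas = [0.5, 0.5, 0.0]
b = 0.0

# Only points with alpha > 0 are support vectors; everything else can be thrown away
support = [(a, x, y) for a, x, y in zip(alphas, train_x, train_y) if a > 0]

def decision(x, kernel=dot):
    """f(x) = sum over support vectors of alpha_i * y_i * k(x_i, x) + b."""
    return sum(a * y * kernel(sv, x) for a, sv, y in support) + b

print(len(support))           # 2
print(decision((2.0, 5.0)))   # 2.0  -> positive class
print(decision((-0.5, 1.0)))  # -0.5 -> negative class
```

The point at (3, 0) gets α = 0, so it’s dropped from the model entirely. Swapping `dot` for another kernel function is all it takes to turn this into a non-linear SVM.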

But…

Even the most powerful superheroes have weaknesses.

SVMs can be computationally intensive and slow to train on very large datasets. They are also sensitive to their hyperparameters (such as the regularization parameter C) and to the type of kernel (yes, there are different types!).
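To show why hyperparameters matter, here’s a sketch built on the standard soft-margin SVM objective, `½‖w‖² + C · Σ max(0, 1 − yᵢ(w · xᵢ + b))`. The first term rewards a wide margin, the second punishes points that end up inside the margin or on the wrong side, and `C` sets how much that punishment counts. It only compares two hand-picked candidate lines on made-up data; a real solver would search over all possible lines.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def soft_margin_objective(w, b, points, labels, C):
    """0.5 * ||w||^2 (wants a wide margin) + C * total hinge loss (punishes margin violations)."""
    hinge = sum(max(0.0, 1 - y * (dot(w, x) + b)) for x, y in zip(points, labels))
    return 0.5 * dot(w, w) + C * hinge

# Made-up data; the last point is a troublemaker sitting close to the positive class
points = [(-2, 0), (-1, 0), (1, 0), (2, 0), (0.5, 0)]
labels = [-1, -1, 1, 1, -1]

wide = ((1.0, 0.0), 0.0)     # margin 1 / ||w|| = 1.0, but misclassifies the troublemaker
narrow = ((4.0, 0.0), -3.0)  # margin 1 / ||w|| = 0.25, gets every point right

for C in (1.0, 10.0):
    cost_wide = soft_margin_objective(*wide, points, labels, C)
    cost_narrow = soft_margin_objective(*narrow, points, labels, C)
    winner = "wide" if cost_wide < cost_narrow else "narrow"
    print(C, cost_wide, cost_narrow, winner)
# 1.0 2.0 8.0 wide
# 10.0 15.5 8.0 narrow
```

With a small `C`, SVM happily sacrifices the troublemaker for a wide margin; crank `C` up and it switches to the cramped line that gets every point right. In scikit-learn, for example, this knob and the kernel choice show up as the `C`, `kernel` and `gamma` arguments of `sklearn.svm.SVC`.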

So like everything else, whether SVMs shine depends on your use case!

⭐ Conclusion

And that’s a wrap for our first season!

We’ve journeyed through our array of contestants that provide the power behind Supervised Learning. And it wasn’t even all of them!

But as with all learning, we need a short break every now and then. This may be the end of our first season, but I’ll be back with even more algorithms to go over.

Be sure to check out all the previous episodes, in case you missed any of it!

Stay tuned for next week, because we’re going to kick off a brand new series, and dive into the one language that makes all this magic in ML possible: Math.

Time to epoch out!

P.S. What did you think of our space-maximizing Support Vector Machines? Rank below: